Title: “A model-independent general search for new phenomena with the ATLAS detector at √s=13 TeV”

Author: The ATLAS Collaboration

Reference: ATLAS-PHYS-CONF-2017-001

When a single experimental collaboration has a few thousand contributors (and even more opinions), there are a lot of rules. These rules dictate everything from how you get authorship rights to how you get chosen to give a conference talk. In fact, this rulebook is so thorough that it could be the topic of a whole other post. But for now, I want to focus on one rule in particular, a rule that has only been around for a few decades in particle physics but is considered one of the most important practices of good science: blinding.

In brief, blinding is the notion that it’s experimentally compromising for a scientist to look at the data before finalizing the analysis. As much as we like to think of ourselves as perfectly objective observers, the truth is, when we really really want a particular result (let’s say a SUSY discovery), that desire can bias our work. For instance, imagine you were looking at actual collision data while you were designing a signal region. You might unconsciously craft your selection in such a way as to force an excess of data over background prediction. To avoid such human influences, particle physics experiments “blind” their analyses while they are under construction, and only look at the data once everything else is in place and validated.

This technique has kept the field of particle physics in rigorous shape for quite a while. But there’s always been a subtle downside to this practice. If we only ever look at the data after we finalize an analysis, we are trapped within the confines of theoretically motivated signatures. In this blinding paradigm, we’ll look at all the places that theory has shone a spotlight on, but we won’t look everywhere. Our whole game is to search for new physics. But what if amongst all our signal regions and hypothesis testing and neural net classifications… we’ve simply missed something?

It is this nagging question that motivates a specific method of combing the LHC datasets for new physics, one that the authors of this paper call a “structured, global and automated way to search for new physics.” With this proposal, we can let the data itself tell us where to look and throw unblinding caution to the winds.

The idea is simple: scan the whole ATLAS dataset for discrepancies, setting a threshold for what defines a feature as “interesting”. If this preliminary scan stumbles upon a mysterious excess of data over Standard Model background, don’t just run straight to Stockholm proclaiming a discovery. Instead, simply remember to look at this area again once more data is collected. If your feature of interest is a fluctuation, it will wash out and go away. If not, you can keep watching it until you collect enough statistics to do the running to Stockholm bit. Essentially, you let a first scan of the data rather than theory define your signal regions of interest. In fact, all the cool kids are doing it: H1, CDF, D0, and even ATLAS and CMS have performed earlier versions of this general search.

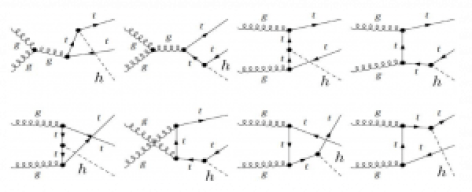

Now for the nuts and bolts of this particular paper: the authors try the strategy out on 3.2 fb⁻¹ of 13 TeV LHC data collected in 2015. Since the whole goal of this strategy is to be as general as possible, we might as well go big or go home with potential topologies. To that end, the authors comb through all the data and select any event “involving high pT isolated leptons (electrons and muons), photons, jets, b-tagged jets and missing transverse momentum”. All of the backgrounds are simply modeled with Monte Carlo simulation.

Once we have all these events, we need to sort them. Here, “the classification includes all possible final state configurations and object multiplicities, e.g. if a data event with seven reconstructed muons is found it is classified in a ‘7-muon’ event class (7μ).” When you add up all the possible permutations of objects and multiplicities, you come up with a cool 639 event classes with at least 1 data event and a Standard Model expectation of at least 0.1.
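
To make the bookkeeping concrete, here is a minimal sketch of how events could be sorted into classes by object multiplicity. The object names, the label ordering, and the helper functions are my own assumptions, chosen to mimic labels like ETmiss2μ1γ4j; this is not the ATLAS implementation.

```python
from collections import Counter

# Assumed ordering of object types inside a class label (illustrative only).
OBJECT_ORDER = ["MET", "mu", "e", "gamma", "b", "j"]

def event_class(objects):
    """Build a label such as 'MET2mu1gamma4j' from a list of object types."""
    counts = Counter(objects)
    unknown = set(counts) - set(OBJECT_ORDER)
    if unknown:
        raise ValueError(f"unknown object types: {sorted(unknown)}")
    parts = []
    for name in OBJECT_ORDER:
        n = counts.get(name, 0)
        if n == 0:
            continue
        # Missing transverse momentum is a per-event flag, so it gets no count.
        parts.append(name if name == "MET" else f"{n}{name}")
    return "".join(parts)

def classify(events):
    """Count how many events fall into each class."""
    return Counter(event_class(ev) for ev in events)

def interesting_classes(data_counts, sm_expectation, min_data=1, min_sm=0.1):
    """Keep classes with at least min_data data events and an SM expectation
    of at least min_sm (the thresholds quoted in the post)."""
    return sorted(
        label for label, n in data_counts.items()
        if n >= min_data and sm_expectation.get(label, 0.0) >= min_sm
    )
```

For example, `event_class(["mu"] * 7)` gives the 7-muon class label `"7mu"`, and a class that shows up in data but has a simulated expectation below 0.1 is dropped by `interesting_classes`.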

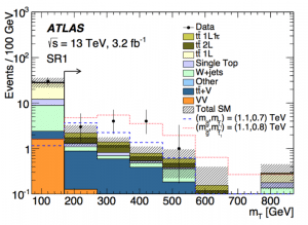

From here, it’s just a matter of checking data vs. MC agreement and the pulls for each event class. The authors also apply some measures to weed out the low-statistics or otherwise sketchy regions; for instance, 1 electron + many jets is more likely to be a multijet event faking a lepton and shouldn’t necessarily be considered a good event category. Once this logic is applied, you can plot all of your signal regions together, grouped by category; Figure 2 shows an example for the multijet events. The paper includes 10 of these plots in total, with regions ranging in complexity from nothing but 1μ1j to more complicated final states like ETmiss2μ1γ4j (say that five times fast).

Once we can see data next to Standard Model prediction for all these categories, it’s necessary to have a way to measure just how unusual an excess may be. The authors of this paper implement an algorithm that searches for the region of largest deviation in the distributions of two variables that are good at discriminating background from new physics. These are the effective mass, the sum of all jet and missing momenta, and the invariant mass, computed with all visible objects and no missing energy.
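
The post doesn't spell the scanning algorithm out, so the following is only a sketch of the general idea under my own assumptions: treat a distribution as a binned histogram, try every contiguous window of bins, and keep the window where the data excess over background is least likely. It uses a plain Poisson tail probability and ignores systematic uncertainties; the function names are hypothetical.

```python
import math

def poisson_tail(n_obs, mean):
    """P(N >= n_obs) for N ~ Poisson(mean)."""
    if n_obs <= 0:
        return 1.0
    term = math.exp(-mean)   # P(N = 0)
    cdf = 0.0
    for k in range(n_obs):   # accumulate P(N = 0) ... P(N = n_obs - 1)
        cdf += term
        term *= mean / (k + 1)
    return max(0.0, 1.0 - cdf)

def scan_largest_excess(data, background):
    """Return (p, lo, hi): the smallest excess p-value found and the
    inclusive bin range of the window that produced it."""
    best = None
    for lo in range(len(data)):
        n, b = 0, 0.0
        for hi in range(lo, len(data)):
            n += data[hi]
            b += background[hi]
            p = poisson_tail(n, b)
            if best is None or p < best[0]:
                best = (p, lo, hi)
    return best
```

With `data = [10, 9, 11, 25, 22, 10]` on a flat background of 10 events per bin, the scan picks out the two-bin window covering bins 3 and 4 as the most discrepant region.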

For each deviation found, a simple likelihood function is built as the convolution of probability density functions (pdfs): one Poissonian pdf to describe the event yields, and Gaussian pdfs for each systematic uncertainty. The integral of this function, p0, is the probability that the Standard Model expectation fluctuated to a yield at least as extreme as the one observed. This p0 value is an industry standard in particle physics: a value of p0 < 3e-7, the famous 5σ, is our threshold for discovery.
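
Here is a rough numerical sketch of that convolution for an excess, assuming a single Gaussian uncertainty on the background (the paper has one Gaussian per systematic, and the function names here are mine). The Poisson tail probability is averaged over Gaussian-smeared background values, with unphysical negative backgrounds dropped and the weights renormalized.

```python
import math
from statistics import NormalDist

def poisson_tail(n_obs, mean):
    """P(N >= n_obs) for N ~ Poisson(mean)."""
    if n_obs <= 0:
        return 1.0
    term = math.exp(-mean)
    cdf = 0.0
    for k in range(n_obs):
        cdf += term
        term *= mean / (k + 1)
    return max(0.0, 1.0 - cdf)

def p0_with_systematic(n_obs, b, sigma_b, steps=2001):
    """Average the Poisson tail over b' = b + sigma_b * theta, where theta is
    a unit Gaussian sampled on a grid from -5 to 5 (assumed single systematic)."""
    if sigma_b == 0:
        return poisson_tail(n_obs, b)
    total, norm = 0.0, 0.0
    for i in range(steps):
        theta = -5.0 + 10.0 * i / (steps - 1)
        b_shifted = b + sigma_b * theta
        if b_shifted <= 0:
            continue  # skip unphysical negative or zero background
        weight = math.exp(-0.5 * theta * theta)
        total += weight * poisson_tail(n_obs, b_shifted)
        norm += weight
    return total / norm

def significance(p):
    """Convert a one-sided p-value into a Gaussian significance Z."""
    return -NormalDist().inv_cdf(p)
```

Calling `significance(3e-7)` lands at roughly 5, which is where the discovery threshold comes from, and adding a background uncertainty (say `p0_with_systematic(25, 10.0, 3.0)`) makes an excess look less significant than the bare Poisson number would suggest.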

Sadly (or reassuringly), the smallest p0 value found in this scan is 3e-04 (in the 1μ1e4b2j event class). To figure out precisely how significant this value is, the authors ran a series of pseudoexperiments for each event class and applied the same scanning algorithm to them, to determine how often such a deviation would occur in a wholly different fake dataset. In fact, a p0 of 3e-04 or smaller was expected in 70% of the pseudoexperiments.
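
The pseudoexperiment logic can be sketched in a few lines. This toy version is an assumption-laden stand-in for what the authors did: it covers a single binned distribution rather than hundreds of event classes, ignores systematics, and throws Poisson fluctuations around the background with a simple sampler. The question it answers is the same, though: how often does a background-only fake dataset produce a local p-value at least as small as the one observed?

```python
import math
import random

def poisson_tail(n_obs, mean):
    """P(N >= n_obs) for N ~ Poisson(mean)."""
    if n_obs <= 0:
        return 1.0
    term = math.exp(-mean)
    cdf = 0.0
    for k in range(n_obs):
        cdf += term
        term *= mean / (k + 1)
    return max(0.0, 1.0 - cdf)

def smallest_p(data, background):
    """Smallest excess p-value over all contiguous bin windows."""
    best = 1.0
    for lo in range(len(data)):
        n, b = 0, 0.0
        for hi in range(lo, len(data)):
            n += data[hi]
            b += background[hi]
            best = min(best, poisson_tail(n, b))
    return best

def sample_poisson(rng, mean):
    """Knuth's multiplication method; fine for the small means used here."""
    limit = math.exp(-mean)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k

def global_fraction(p_obs, background, n_pseudo=500, seed=1):
    """Fraction of background-only pseudoexperiments whose smallest scanned
    p-value is at least as small as p_obs."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_pseudo):
        toy = [sample_poisson(rng, b) for b in background]
        if smallest_p(toy, background) <= p_obs:
            hits += 1
    return hits / n_pseudo
```

Because the scan tries many windows, the returned fraction is generally larger than the local p-value you feed in. That is the look-elsewhere effect at work, and it is why a local 3e-04 can turn out to be entirely unremarkable.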

So the excesses that were observed are not (so far) significant enough to focus on. But the beauty of this analysis strategy is that this deviation can be easily followed up with the addition of a newer dataset. Think of these general searches as the sidekick of the superheroes that are our flagship SUSY, exotics, and dark matter searches. They can help us dot i’s and cross t’s, make sure nothing falls through the cracks, and eventually, just maybe, make a discovery.