Before we dig into all the physics behind these acronyms (beyond SM physics! dark matter!), let’s start by breaking down the title.

QCD, or quantum chromodynamics, is the theory of how quarks and gluons interact through the strong force. CP is the combined operation of charge conjugation (C), which swaps a particle for its antiparticle, and parity (P), which mirrors space and so switches left and right. CP symmetry states that applying both operations should leave the laws of physics unchanged, which holds for electromagnetism. Interestingly, CP is violated by the weak force (which ties into the puzzle of the matter-antimatter asymmetry [1]). But more importantly for us, the strong force appears to preserve CP symmetry. In fact, that's exactly the problem.
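To make the two operations concrete, here is a toy Python sketch that tracks only a few labels on a particle state: particle or antiparticle, charge, direction of motion, and helicity (left- or right-handed). The `State` class and the `charge_conjugate`, `parity`, and `cp` functions are hypothetical bookkeeping, not a physics library. The classic neutrino example falls out: C alone or P alone turns a left-handed neutrino into a state the weak force doesn't produce, while CP turns it into a right-handed antineutrino, which the weak force does produce.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class State:
    """Toy particle state: just enough labels to see what C and P do (hypothetical)."""
    name: str         # particle name without the "anti-" prefix
    anti: bool        # True for the antiparticle
    charge: int       # electric charge in units of e
    momentum: tuple   # direction of motion (x, y, z)
    helicity: str     # "L" (left-handed) or "R" (right-handed)

    def label(self) -> str:
        prefix = "anti-" if self.anti else ""
        return f"{self.helicity}-handed {prefix}{self.name}"


def charge_conjugate(s: State) -> State:
    # C: particle <-> antiparticle and charge flips; motion and spin are untouched.
    return replace(s, anti=not s.anti, charge=-s.charge)


def parity(s: State) -> State:
    # P: mirror space, so momentum flips. Spin is an axial vector and does not
    # flip, so helicity (spin projected on momentum) does.
    flipped = tuple(-p for p in s.momentum)
    return replace(s, momentum=flipped, helicity="R" if s.helicity == "L" else "L")


def cp(s: State) -> State:
    # CP: apply C, then P.
    return parity(charge_conjugate(s))


nu = State("neutrino", anti=False, charge=0, momentum=(0, 0, 1), helicity="L")
print(charge_conjugate(nu).label())  # L-handed anti-neutrino (not produced by the weak force)
print(parity(nu).label())            # R-handed neutrino (not produced by the weak force)
print(cp(nu).label())                # R-handed anti-neutrino (produced by the weak force)
```

The weak force's actual CP violation is a much subtler effect than this toy can capture; the sketch only shows what the C and P operations do to a state.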

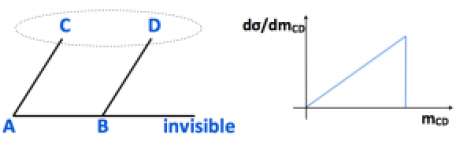

CP violation in QCD would give the neutron an electric dipole moment. Experimentally, physicists have constrained this dipole moment to be extremely close to zero. But the QCD vacuum turns out to be more complicated than first thought, and it allows an extra term in the Lagrangian (Figure 2), controlled by a phase parameter θ, that breaks CP [2]. Nothing in the theory fixes the value of θ, so we would naively expect some visible degree of CP violation, but experimentally we just don't see it. Why this phase is so small is known as the strong CP problem.
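For a sense of scale, here is a back-of-the-envelope Python sketch. The term in question is usually written $\theta \frac{g_s^2}{32\pi^2} G^a_{\mu\nu}\tilde{G}^{a\mu\nu}$, and the neutron dipole moment it induces grows roughly linearly with the effective phase $\bar\theta$ (θ combined with a phase from the quark masses), $d_n \approx C\,\bar\theta$. The coefficient $C$ below is one commonly quoted estimate, and estimates differ by a factor of a few, so treat it as an assumption. The limit $|d_n| < 3.0\times10^{-26}\,e\cdot\text{cm}$ is the 2015 experimental bound (90% CL).

```python
# Rough upper bound on the QCD phase theta-bar from the neutron EDM.
# Assumption: d_n ~ C_THETA * theta_bar, with C_THETA one commonly quoted
# QCD sum-rule estimate; other estimates differ by a factor of a few.
C_THETA = 2.4e-16    # e*cm per unit theta_bar (assumed coefficient)
D_N_LIMIT = 3.0e-26  # e*cm, experimental upper limit on |d_n| (90% CL, 2015)


def theta_bar_bound(d_n_limit: float, coefficient: float) -> float:
    """Largest |theta_bar| compatible with an EDM limit, if d_n = coefficient * theta_bar."""
    return d_n_limit / coefficient


bound = theta_bar_bound(D_N_LIMIT, C_THETA)
print(f"|theta_bar| < {bound:.1e}")  # roughly 1e-10
```

A phase with no reason to be small would naively be of order one, so a bound around $10^{-10}$ is what makes the observed value look so suspicious.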

Naturally, physicists want to find a fix for this problem, which brings us to the rest of the article title. The best-known solution is Peccei-Quinn, or PQ, theory. The idea is that the phase parameter is not a fixed constant but a dynamical field, made possible by a new global symmetry added to the Standard Model. This symmetry, called U(1)_PQ, is spontaneously broken, meaning that the laws of the theory respect the symmetry but its ground state (the vacuum) does not.
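Spontaneous symmetry breaking is easiest to see in a toy potential. The sketch below assumes the textbook "Mexican hat" potential for a single complex field, $V(\phi) = \lambda(|\phi|^2 - f^2/2)^2$, with made-up values for $\lambda$ and $f$ (`LAMBDA` and `F`); realistic PQ models add more fields and couplings. The potential depends only on $|\phi|$, so rotating the phase of $\phi$ is a symmetry of the theory, yet the symmetric point $\phi = 0$ is not the lowest-energy state.

```python
import cmath
import math

LAMBDA = 0.1  # hypothetical quartic coupling
F = 1.0       # hypothetical symmetry-breaking scale (arbitrary units)


def potential(phi: complex) -> float:
    """Toy Mexican-hat potential V = LAMBDA * (|phi|^2 - F^2/2)^2.
    It depends only on |phi|, so it is invariant under phi -> exp(i*beta) * phi."""
    return LAMBDA * (abs(phi) ** 2 - F ** 2 / 2) ** 2


# 1) The symmetric point phi = 0 is not the minimum.
print(potential(0))  # equals LAMBDA * F**4 / 4, which is positive

# 2) Scan the radius |phi|: the minimum sits at F / sqrt(2), not at 0.
radii = [i * 0.001 for i in range(2001)]
r_min = min(radii, key=potential)
print(r_min, F / math.sqrt(2))

# 3) Every phase on that circle is an equally good ground state. Moving
#    around the circle costs no energy: that is the Goldstone direction.
energies = [potential(cmath.rect(F / math.sqrt(2), beta)) for beta in (0, 1, 2, 3)]
print(energies)  # all essentially zero
```

The ground states form a circle, and the vacuum has to pick one point on it; that choice is what breaks the U(1). The flat direction around the circle is where the massless Goldstone boson lives.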

This may sound a bit similar to the Higgs mechanism, because it is: in both cases, a field picks up a non-zero vacuum expectation value. When a global continuous symmetry like U(1)_PQ is spontaneously broken, the result is a massless boson, called a Goldstone boson. (In the Higgs mechanism, by contrast, the would-be Goldstone bosons are absorbed by the W and Z bosons, giving them mass.) Very few things are exact in physics, though: U(1)_PQ is also slightly broken by QCD effects, so this Goldstone boson gains a tiny bit of mass after all. This new particle created from PQ theory is called the axion. The axion effectively steps into the role of the phase parameter, and its potential drives the effective CP-violating phase to relax to 0.
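Here is a minimal sketch of that relaxation, assuming the simplest cosine form for the potential that QCD effects generate for the axion field $a$: $V(a) = \Lambda^4\,[1 - \cos(\bar\theta + a/f_a)]$, where $f_a$ is the axion decay constant. All parameter values (`LAMBDA4`, `F_A`, `THETA_BAR`) are arbitrary illustration numbers, and plain gradient descent is just a stand-in for the field rolling downhill, not a model of the actual cosmological evolution. Wherever $\bar\theta$ starts, the minimum sits at $\bar\theta + a/f_a = 0$, and the curvature there gives a small mass, $m_a^2 \approx \Lambda^4/f_a^2$.

```python
import math

# Toy axion potential, assuming the simple cosine form
#   V(a) = LAMBDA4 * (1 - cos(THETA_BAR + a / F_A))
# All numbers are arbitrary illustration values, not physical ones.
LAMBDA4 = 1.0    # hypothetical QCD energy scale to the fourth power
F_A = 10.0       # hypothetical axion decay constant
THETA_BAR = 2.0  # the bare CP-violating phase the axion has to cancel


def V(a: float) -> float:
    return LAMBDA4 * (1 - math.cos(THETA_BAR + a / F_A))


def dV(a: float) -> float:
    # Derivative of V with respect to the axion field a.
    return LAMBDA4 * math.sin(THETA_BAR + a / F_A) / F_A


# Let the field "roll downhill" with plain gradient descent.
a = 0.0
step = 50.0
for _ in range(2000):
    a -= step * dV(a)

theta_eff = THETA_BAR + a / F_A
print(f"effective theta after relaxation: {theta_eff:.2e}")  # essentially 0

# Curvature at the minimum (finite difference) gives the axion mass squared.
h = 1e-3
m2 = (V(a + h) - 2 * V(a) + V(a - h)) / h**2
print(m2, LAMBDA4 / F_A**2)  # both about 0.01
```

Because the mass scales like $1/f_a$, a larger symmetry-breaking scale means a lighter axion.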

Is it reasonable to imagine some extra massive particle beyond the Standard Model bouncing around that we haven't detected yet? Sure. Perhaps the axion is so heavy that we haven't yet probed the necessary energy range at the LHC. Or maybe it interacts so rarely that we've been looking in the right places and just haven't collected enough statistics. But any undiscovered massive particle floating around should make you think about dark matter (DM). In fact, the axion is one of the most promising remaining candidates for DM, and lots of people are looking pretty hard for it.

One of the largest collaborations is ADMX at the University of Washington, which uses a resonant RF cavity inside a superconducting magnet to detect the very rare conversion of a DM axion into a microwave photon [3]. To be a good dark matter candidate, the axion would have to be fairly light, and some theories place its mass below 1 eV (for reference, neutrino masses are at most a fraction of an eV). ADMX has already ruled out axions in parts of the micro-eV mass range. However, theorists are clever, and there's a lot of model tuning available that can put the axion mass practically anywhere you want it to be.
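Two quick conversions connect these numbers, sketched in Python below. First, the QCD axion mass is tied to its decay constant $f_a$ by a commonly quoted approximate relation, $m_a \approx 5.7\,\mu\text{eV} \times (10^{12}\,\text{GeV}/f_a)$ (treat the coefficient as an assumption). Second, since dark-matter axions move slowly, the converted photon carries essentially the axion's rest energy, so its frequency is $\nu \approx m_a c^2/h$. The function names and the sample $f_a$ values are hypothetical.

```python
# Planck constant in eV*s (exact in the 2019 SI).
H_EV_S = 4.135667696e-15


def axion_mass_ueV(f_a_GeV: float) -> float:
    """QCD axion mass in micro-eV from the approximate relation
    m_a ~ 5.7 micro-eV * (1e12 GeV / f_a)  (assumed coefficient)."""
    return 5.7 * (1e12 / f_a_GeV)


def photon_frequency_MHz(m_a_ueV: float) -> float:
    """Frequency of the photon from axion -> photon conversion in a cavity.
    The photon carries (almost exactly) the axion rest energy E = m_a c^2,
    so nu = E / h; the tiny kinetic-energy correction is ignored."""
    energy_eV = m_a_ueV * 1e-6
    return energy_eV / H_EV_S / 1e6


for f_a in (1e11, 1e12, 1e13):  # hypothetical decay constants in GeV
    m = axion_mass_ueV(f_a)
    print(f"f_a = {f_a:.0e} GeV -> m_a = {m:.2f} ueV -> nu = {photon_frequency_MHz(m):.0f} MHz")
```

One micro-eV corresponds to roughly 242 MHz, so a micro-eV axion converts into a photon between a few hundred MHz and a few GHz, which is why ADMX searches with a tunable microwave cavity.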

Now is the time to factor in the recent buzz about a diphoton excess at 750 GeV (see the January 2016 ParticleBites post to catch up on this). Recent papers are trying to place the axion at this mass, since no Standard Model process has yet explained that resonance.

For example, one can consider aligned QCD axion models, in which there are multiple axions with decay constants around the weak scale, consistent with the dark matter relic abundance [4]. The models get pretty diverse from here; suffice it to say that there are many possibilities. Though this excess is still far from confirmed, it is always exciting to speculate about what we don't know and how we might figure it out. Thanks to the strong CP problem and these recent model developments, the axion has earned a place pretty high up on this speculation list.

References

- [1] G. Sciolla, "The Mystery of CP Violation," MIT.

- [2] "TASI Lectures on the Strong CP Problem."

- [3] Axion Dark Matter Experiment (ADMX).

- [4] "Quality of the Peccei-Quinn Symmetry in the Aligned QCD Axion and Cosmological Implications," arXiv:1603.0209 [hep-ph].

- [5] The Big Blog Theory, on axions.